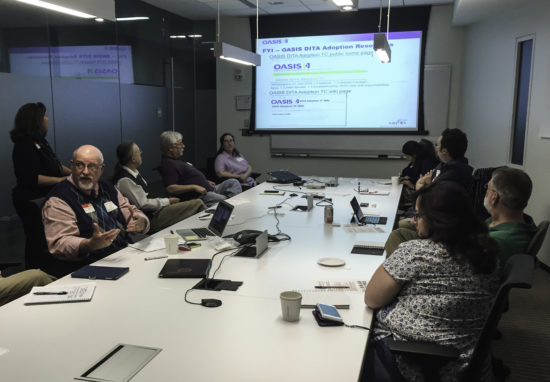

My work for IXIASOFT includes being a company liaison for OASIS, the open standards body that produces the DITA specification. One of the groups I actively work with there is the DITA Adoption Committee, which I now chair, with Stan Doherty as secretary. Its goals are to inform the DITA community about best practices and to provide general information on how to use DITA, chiefly by publishing white papers on DITA-related subjects. Shortly after the official launch of the DITA 1.3 specification (which comes from the separate DITA Technical Committee), Stan asked whether this might be a good time to gauge the pulse of the DITA community as a whole before forging ahead in earnest with future DITA 2.0 development. Out of this came the idea for the “DITA Listening Sessions”, where the intent was to gather DITA practitioners together and discover their attitudes about the current state of DITA.

Stan is a resident of Boston, and since he was the driving force behind the project, all of the sessions he hosted were in the Boston area. Subsequently there has been one additional session in Denver, and there are tentative plans to hold future sessions in Silicon Valley, Austin, Toronto and Seattle. IXIASOFT has a vested interest in understanding the concerns and issues facing DITA users in order to get a better sense of where future development efforts should go, and so I was able to attend these sessions and aid Stan as an OASIS DITA representative.

The three Boston-area sessions were held in Cambridge, Littleton, and Marlborough. A fourth session was held in Denver, Colorado. More than 65 people attended across these sessions, representing companies like VCE, Progress Software, Xilinx, Fidelity Investments, IBM, Oracle and more. The attendees came with varying levels of experience, ranging from those who simply wanted to find out more about DITA, to those with a couple of years’ worth of experience, to those who had very mature processes and a deep knowledge of the XML standard. In addition to Stan and me, other members of the OASIS DITA committees were able to attend remotely, including Bob Thomas, Don Day, Joe Storbeck and, prior to her retirement, JoAnn Hackos. We had several set questions for the people attending, asking what has and has not worked well, but after that the sessions were open to let people express their opinions and experiences with DITA. We were there to listen.

Confusion About the Relationship Between DITA and the DITA-OT

The majority of the people who attended the listening sessions were using DITA to write content and using the DITA Open Toolkit (DITA-OT) to process document outputs. Several people expressed the desire for more capabilities from the DITA-OT, the most common requests being for more robust HTML5 output and for an easier way to craft the XSL that formats the content. Another repeated request was for more information on the inner workings of the DITA-OT, in order to make its operation easier to troubleshoot.

After several people expressed their opinions about the DITA-OT and what they wanted to see in future versions, it became clear that there was confusion about the relationship between the DITA standard from OASIS and the DITA-OT open source development project. The DITA-OT has become the de facto way to output content written using the DITA standard, and for many people the two naturally go hand-in-hand. In fact, neither the OASIS DITA Adoption Committee nor the OASIS DITA Technical Committee controls the work done by the DITA-OT open source project. This distinction was far from clear to many of those attending the sessions, even to those who have been using DITA and the DITA-OT for years.

As a result of this feedback, the DITA Technical Committee is authoring a committee note outlining the distinction between the OASIS organization that crafts the DITA standard and the DITA-OT open source project, and the relationship between the two. There’s also a blog post from DITA-OT developer Robert Anderson on the same subject, which is well worth a read.

For those who are looking to influence the direction of DITA-OT development, the best solution is to contribute to the development process, comment on any documentation issues you discover, or look at the list of known issues to see if your pet peeve is already being worked on. Anything you can do to help the small but feisty DITA-OT development team can improve things for the whole of the DITA community.

Migrating from DITA Conrefs to Keys is a Real Problem for Many

A fervent wish expressed by several people from technical documentation groups that have been using DITA for years was to move from their existing conref-based processes to a DITA 1.2 key-based way of sharing content. They are sold on the idea of keys in their various forms (keys, keydefs, and conkeyrefs from DITA 1.2), as this referencing mechanism offers a more straightforward way of managing reusable content for things like product names, warehousing images and icons (i.e. “resource keys”), commonly referenced website xrefs, topics (i.e. “navigation keys”) and more. The way keys are created prevents the potential “spaghetti conref” issue that can arise if conrefed content is itself conrefed. (If you are looking for a demonstration of the versatility of DITA keys, download the excellent example Thunderbird documentation set available from GitHub.) Keys also offer an opportunity to simplify conditionalized content.
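To make the indirection concrete, here is a minimal sketch in Python of how a key reference might be resolved. It is an illustration under stated assumptions, not how the DITA-OT or any particular CCMS implements key resolution: the map and topic fragments, the key names `product-name` and `support-site`, and the helper functions `build_key_space` and `resolve` are all hypothetical. The markup itself follows the DITA 1.2 pattern of a `keydef` in a map and a `keyref` in a topic.

```python
import xml.etree.ElementTree as ET

# Hypothetical map fragment: each keydef binds a key name to a value.
MAP_XML = """
<map>
  <keydef keys="product-name">
    <topicmeta><keywords><keyword>Acme Widget</keyword></keywords></topicmeta>
  </keydef>
  <keydef keys="support-site" href="https://example.com/support"
          scope="external" format="html"/>
</map>
"""

# Hypothetical topic fragment: it names keys, never files or literal text.
TOPIC_XML = """
<p>Install <ph keyref="product-name"/> and then visit
<xref keyref="support-site"/> for updates.</p>
"""


def build_key_space(map_xml):
    """Collect key names and their bindings; the first definition of a key wins."""
    key_space = {}
    for keydef in ET.fromstring(map_xml).iter("keydef"):
        keyword = keydef.find("topicmeta/keywords/keyword")
        binding = {
            "text": keyword.text if keyword is not None else None,
            "href": keydef.get("href"),
        }
        # The keys attribute may list several space-separated names.
        for name in keydef.get("keys", "").split():
            key_space.setdefault(name, binding)
    return key_space


def resolve(topic_xml, key_space):
    """Return the topic's text with each keyref replaced by its bound value."""
    root = ET.fromstring(topic_xml)
    for elem in root.iter():
        key = elem.get("keyref")
        if key in key_space:
            binding = key_space[key]
            # Prefer the keyword text; fall back to the link target.
            elem.text = binding["text"] or binding["href"]
    # Flatten to plain text and normalize whitespace.
    return " ".join("".join(root.itertext()).split())


print(resolve(TOPIC_XML, build_key_space(MAP_XML)))
# prints: Install Acme Widget and then visit https://example.com/support for updates.
```

The point of the sketch is that the topic never mentions the product name or the URL directly. Changing either one means editing a single `keydef` in the map, not hunting through every topic that uses it.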

So what’s stopping these mature DITA implementations from moving forward? Based on the responses from the attendees, there are three main reasons for this:

- It took a long time for some DITA software tools to implement keys

- The amount of legacy DITA content based on conref reuse methods

- Relative lack of information as to the process utility of keys

For those who were using DITA before the release of DITA 1.2, much of their content reuse below the topic level has been accomplished with conrefs. It has become common practice at many firms to combine conrefed content with conditions in order to produce related but different document deliverables. As complex as conrefs can get, using them with conditional markup can make things significantly more complex.

Keys can also drastically reduce the amount of conditional markup (selection attributes) within publications. An example of when keys could replace conditions is when there are two or more adjacent elements with mutually exclusive conditions, meaning that exactly one of the adjacent elements will be included while the others are excluded. In those cases, you can have a single key that resolves differently for each scenario. A combination of keys and conkeyrefs can turn a topic into a “template” that uses keys to generate product-specific content that varies only slightly from one product to the next. The DITA 1.2 key mechanisms offer a way out of this quagmire of complexity. But the larger the amount of legacy conrefed content, the bigger the problem: I found it telling that one of the people who wanted to use conkeyrefs in their current implementation but couldn’t, due to legacy DITA content issues, was someone who worked at IBM.
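The case of adjacent, mutually exclusive conditions can be sketched in a few lines of Python. This is only a rough model of the idea, assuming a made-up topic about cables, two hypothetical products (`basic` and `pro`), a hypothetical key named `cable-type`, and helper functions (`load_keys`, `render`, `publish`) invented for the illustration. Real processors handle filtering and key resolution in far more detail.

```python
import xml.etree.ElementTree as ET

# Conditional approach: adjacent, mutually exclusive elements in the topic.
CONDITIONAL_TOPIC = """
<p>Connect the <ph product="basic">USB cable</ph><ph product="pro">Ethernet cable</ph> to the unit.</p>
"""

# Key-based approach: one keyref, no selection attributes in the topic.
KEYED_TOPIC = """
<p>Connect the <ph keyref="cable-type"/> to the unit.</p>
"""

# One hypothetical map per product; each binds the same key to different text.
PRODUCT_MAPS = {
    "basic": '<map><keydef keys="cable-type"><topicmeta><keywords>'
             '<keyword>USB cable</keyword></keywords></topicmeta></keydef></map>',
    "pro": '<map><keydef keys="cable-type"><topicmeta><keywords>'
           '<keyword>Ethernet cable</keyword></keywords></topicmeta></keydef></map>',
}


def load_keys(map_xml):
    """Map each key name to its keyword text; the first definition wins."""
    keys = {}
    for keydef in ET.fromstring(map_xml).iter("keydef"):
        keyword = keydef.find("topicmeta/keywords/keyword")
        if keyword is not None:
            for name in keydef.get("keys", "").split():
                keys.setdefault(name, keyword.text)
    return keys


def render(elem, product, key_space):
    """Flatten an element to text, applying filtering and key resolution."""
    cond = elem.get("product")
    if cond is not None and product not in cond.split():
        return ""  # excluded by the product condition
    key = elem.get("keyref")
    parts = [key_space[key] if key in key_space else (elem.text or "")]
    for child in elem:
        parts.append(render(child, product, key_space))
        parts.append(child.tail or "")  # text after the child is always kept
    return "".join(parts)


def publish(topic_xml, product, map_xml=None):
    """Produce the plain-text output of a topic for one product."""
    key_space = load_keys(map_xml) if map_xml else {}
    text = render(ET.fromstring(topic_xml), product, key_space)
    return " ".join(text.split())


for product in ("basic", "pro"):
    filtered = publish(CONDITIONAL_TOPIC, product)
    keyed = publish(KEYED_TOPIC, product, PRODUCT_MAPS[product])
    print(product, "|", keyed, "|", filtered == keyed)
```

Both approaches yield the same sentence for each product, but the keyed topic carries no selection attributes at all. Adding a third product would mean adding one more map with its own `keydef`, leaving the topic untouched.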

Although keys were introduced over five years ago with the launch of the DITA 1.2 specification, some tool vendors were slow to adopt them, so depending on the CCMS or editor being used, support for keys has been late in coming. There was also no guidance from the DITA Technical Committee or the DITA Adoption Committee on how to migrate effectively from conrefs to keys, or on what advantages such a migration would bring.

While it was clear that some people at the sessions were aware of the utility of keys over conrefs, that knowledge is not pervasive. I’ve outlined some of the advantages here, but this knowledge has come from personal experience; there’s next to no information out there that specifically addresses the advantages of a key-based approach to content reuse. Several people at the listening sessions remarked that they are interested in using keys, but did not have enough information on what they could do with them and why they are an improvement. The focus of early articles on the subject of keys was on the mechanics of using them: there was little information on the benefits of keys from a process standpoint. This is something that needs to be addressed; all I can say at the moment is: stay tuned!

In the next post: the desire to build community, the concern that DITA is not meeting current needs for some, and the level of interest in Lightweight DITA.

There are currently tentative plans to hold future listening sessions in Silicon Valley, Seattle, Toronto, and possibly Kitchener/Waterloo. If you are interested in attending or can help arrange a venue for a DITA Listening Session in your city, please reach out to me at: keith.roberts@ixiasoft.com.

Thanks also go out to Bob Thomas for his review and suggestions relating to this piece.